Mastering Your Data Warehouse ETL Pipeline

The Power of Data Integration for Business Intelligence

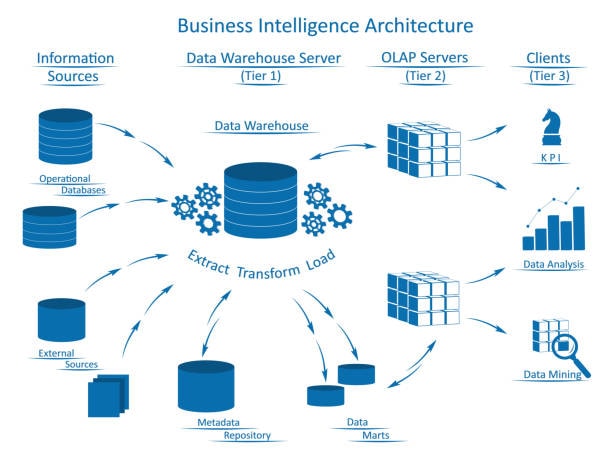

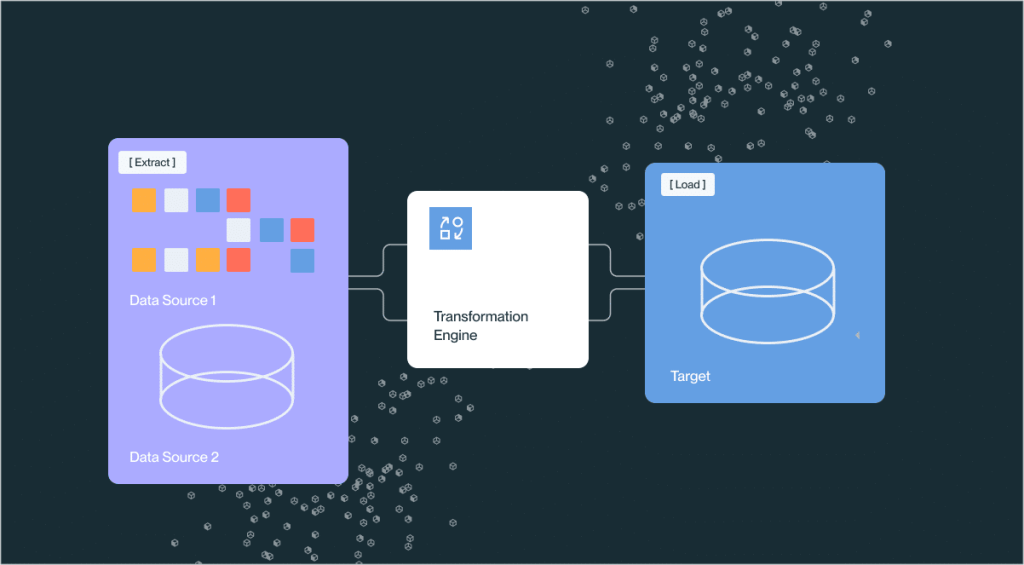

Mastering your data warehouse ETL pipeline is crucial for transforming raw data into valuable insights. A robust ETL process—encompassing data extraction, transformation, and loading—enables companies to consolidate data from multiple sources and drive accurate data analysis and business intelligence.

Understanding the Data Warehouse ETL Pipeline

A successful data warehouse ETL pipeline is the backbone of a powerful data integration process, turning raw data into structured data that supports accurate data analysis and business intelligence.

What is a Data Warehouse?

A data warehouse is a centralized repository where data from multiple sources is consolidated, processed, and stored for analysis.

It primarily stores structured and semi-structured data, allowing organizations to analyze data sets and derive valuable insights.

It is the target system for the entire extract, transform, load (ETL) process.

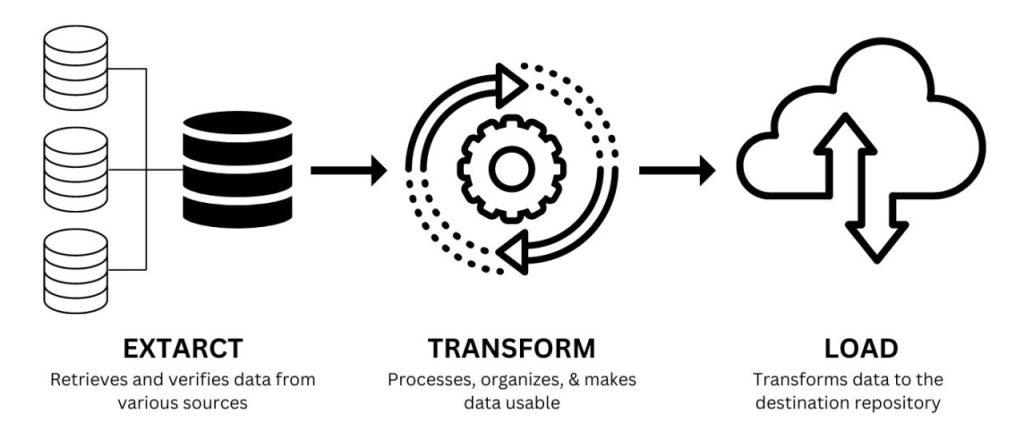

The Role of ETL in Data Integration

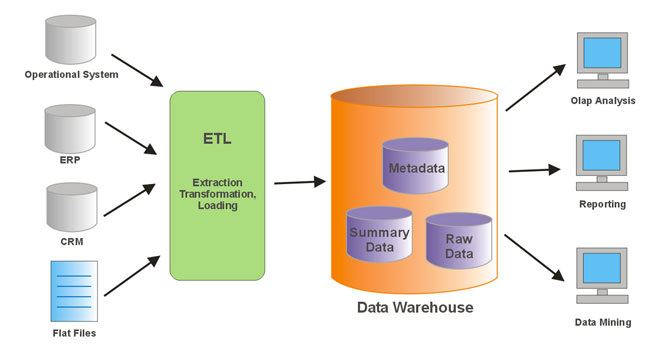

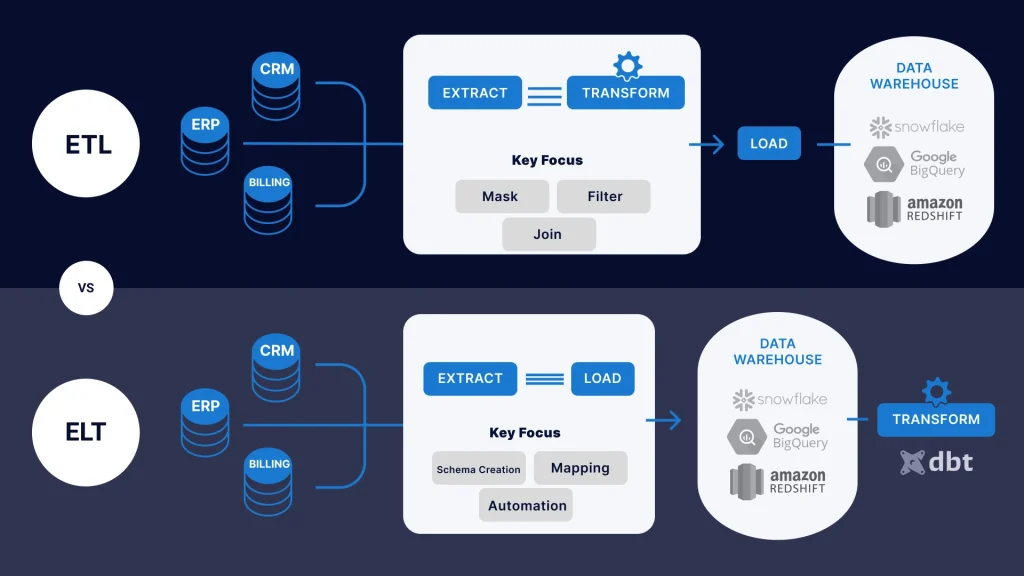

ETL is a critical component that manages the movement and transformation of raw data from various data sources into the data warehouse.

The first stage, extraction, pulls raw data from multiple sources.

Data integration is the systematic process of integrating data from different systems into one unified view, which is essential for effective business analytics.

The Extract Process: Pulling Raw Data from Multiple Sources

Extracting data is the first step in the ETL data ingestion pipeline, where raw data is pulled from multiple sources.

Extracting Data: The First Step

The extraction process involves collecting raw data from internal systems, external sources, or unstructured data repositories.

This step ensures that all data, from transactional to behavioral data, is captured for further processing.
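As a rough illustration, the sketch below pulls records from two hypothetical sources, a CSV export from an order system and a JSON payload from a CRM, and keeps them grouped by source. The inline sample data and the function names (`extract_csv`, `extract_json`, `extract_all`) are assumptions for illustration; a real pipeline would read from files, databases, or API endpoints.

```python
import csv
import io
import json

# Hypothetical sample payloads standing in for two source systems.
ORDERS_CSV = """order_id,customer_email,amount
1001,ana@example.com,25.50
1002,ben@example.com,12.00
"""
CRM_JSON = '[{"email": "ana@example.com", "name": "Ana"}, {"email": "ben@example.com", "name": "Ben"}]'


def extract_csv(text: str) -> list[dict]:
    """Read CSV text into one dict per row, keyed by the header line."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json(text: str) -> list[dict]:
    """Parse a JSON array of objects into a list of dicts."""
    return json.loads(text)


def extract_all() -> dict[str, list[dict]]:
    """Pull raw records from every source, keyed by source name."""
    return {
        "orders": extract_csv(ORDERS_CSV),
        "crm": extract_json(CRM_JSON),
    }


if __name__ == "__main__":
    for source, rows in extract_all().items():
        print(source, len(rows))
```

Values stay as raw strings at this stage (for example, `amount` is `"25.50"`); converting types is left to the transformation step.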

Dealing with Unstructured Data and Data Quality

Data extraction must handle different types of data and formats, including unstructured and duplicate data.

Ensuring data quality during extraction is critical, as missing values and low-quality data can affect the entire pipeline.

Overcoming Challenges: Duplicate Data and Missing Values

Data cleansing is an essential step during extraction to remove duplicate data and fill in missing values.

Advanced ETL tools can help identify and manage duplicate data and missing values, ensuring that only relevant, high-quality data is processed.
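A minimal sketch of the two cleansing steps described above, assuming each record carries a unique key field: duplicates are dropped (the first occurrence is kept) and empty fields are filled from a table of defaults. The field names and the `UNKNOWN` default are hypothetical.

```python
def cleanse(records: list[dict], key: str, defaults: dict) -> list[dict]:
    """Drop duplicate records by `key` and fill empty fields from `defaults`.

    Assumes every record has a value for `key`; the first occurrence wins.
    """
    seen = set()
    cleaned = []
    for record in records:
        record_key = record[key]
        if record_key in seen:
            continue  # duplicate: skip it
        seen.add(record_key)
        row = dict(record)  # copy so the input records are left untouched
        for field, default in defaults.items():
            if row.get(field) in (None, ""):
                row[field] = default
        cleaned.append(row)
    return cleaned


rows = [
    {"id": 1, "email": "ana@example.com", "country": "PT"},
    {"id": 1, "email": "ana@example.com", "country": "PT"},
    {"id": 2, "email": "ben@example.com", "country": ""},
]
print(cleanse(rows, key="id", defaults={"country": "UNKNOWN"}))
```

Filling with an explicit placeholder, rather than guessing a value, keeps the gap visible to analysts downstream.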

Data Transformation: Converting Raw Data into Usable Format

Data transformation is the core of the ETL process: it converts raw data into a structured and usable format.

Understanding the Data Transformation Process

Data transformation involves converting raw data into a desired format using complex transformations and mapping techniques.

This process ensures that data from multiple sources is integrated and standardized, enabling accurate data analysis.

Complex Transformations and Data Mapping

Complex transformations may involve calculations, aggregations, and data cleansing to prepare transformed data for loading.

Data mapping defines how each source field corresponds to a field in the target schema, ensuring all data conforms to the required structure.
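One simple way to express a data mapping is a lookup table from source field names to target field names. The sketch below assumes two hypothetical sources, `crm` and `shop`, that use different column names for the same customer attributes.

```python
# Hypothetical mappings from each source's column names to the target schema.
FIELD_MAPS = {
    "crm": {"EmailAddress": "email", "FullName": "name"},
    "shop": {"customer_email": "email", "customer_name": "name"},
}


def map_record(source: str, record: dict) -> dict:
    """Rename a record's fields to target names; unmapped fields are dropped."""
    mapping = FIELD_MAPS[source]
    return {target: record[src] for src, target in mapping.items() if src in record}


print(map_record("crm", {"EmailAddress": "ana@example.com", "FullName": "Ana", "Notes": "x"}))
print(map_record("shop", {"customer_email": "ben@example.com", "customer_name": "Ben"}))
```

Both calls produce records with the same `email` and `name` keys, which is what lets later steps treat the two sources uniformly.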

Data Cleansing and Format Conversion

Data cleansing removes inaccuracies and duplicate records and corrects missing values to improve data quality.

Format conversion puts all data into consistent, usable formats, which makes it easier to integrate into the target system.
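Format conversion often comes down to normalizing dates and numbers. This sketch assumes a small, fixed list of input date formats and dollar-denominated amount strings; real sources usually need per-source configuration, because a value such as `03/04/2024` is ambiguous without it.

```python
from datetime import datetime
from decimal import Decimal

# Assumed input formats, tried in order; chosen so no string matches more than one.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")


def to_iso_date(value: str) -> str:
    """Convert a date string in any of DATE_FORMATS to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    raise ValueError(f"Unrecognized date format: {value!r}")


def to_amount(value: str) -> Decimal:
    """Strip a dollar sign and thousands separators, e.g. '$1,234.50'."""
    return Decimal(value.replace("$", "").replace(",", "").strip())
```

Raising on an unrecognized format, instead of silently passing the value through, lets the pipeline route the row to review.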

Loading Data: Integrating Transformed Data into the Target System

Once data is transformed, it must be loaded into the data warehouse for analysis.

The Loading Process: From Data Lake to Data Warehouse

Loading data involves transferring the transformed data into a target system, such as a data warehouse or data lake, where it can be analyzed.

The process ensures that all transformed data is consolidated into one system for a unified view.
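A minimal loading sketch using Python's built-in `sqlite3` module as a stand-in for a data warehouse. The `customers` table and its columns are hypothetical; the point is that the batch is loaded inside one transaction, so a failure rolls the whole batch back instead of leaving a partial load.

```python
import sqlite3


def load_customers(conn: sqlite3.Connection, rows: list[dict]) -> int:
    """Load transformed rows into a hypothetical `customers` table in one transaction.

    Returns the table's row count after the load.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT)"
    )
    with conn:  # commits if the block succeeds, rolls back if it raises
        conn.executemany(
            "INSERT INTO customers (email, name) VALUES (:email, :name)", rows
        )
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(load_customers(conn, [
    {"email": "ana@example.com", "name": "Ana"},
    {"email": "ben@example.com", "name": "Ben"},
]))
```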

Ensuring Accurate Data Analysis and Business Intelligence

Accurate data analysis depends on the quality of data loaded into the data warehouse.

A robust loading process ensures that the final data sets are reliable, complete, and error-free, supporting advanced business intelligence and analytics tools.

Key Technologies and Tools for ETL Pipelines

Leveraging the right technology and data models is critical for building an effective ETL pipeline.

Data Integration Solutions and ETL Tools

Use a comprehensive data integration solution to consolidate data from multiple sources.

The right ETL tool facilitates the entire process—from extracting raw data to loading it into the target system.

Leveraging Data Virtualization and Cloud Storage

Data virtualization allows data teams to access and integrate data without physically moving it, streamlining the integration process.
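To make the idea concrete, here is a toy, in-memory illustration of virtualization: a view object that holds references to its sources and fetches rows only when it is read, so nothing is copied ahead of time. `VirtualView` and its methods are hypothetical names; production virtualization layers push queries down to the source systems rather than filtering rows in Python.

```python
from typing import Callable, Iterable, Iterator

# A source is anything that can be called to fetch its current rows.
Source = Callable[[], Iterable[dict]]


class VirtualView:
    """Toy virtual view: exposes several sources as one table without copying them.

    Rows are fetched from each source only when the view is read, so results
    always reflect the sources' current contents.
    """

    def __init__(self, sources: dict[str, Source]) -> None:
        self.sources = sources

    def __iter__(self) -> Iterator[dict]:
        for name, fetch in self.sources.items():
            for row in fetch():
                yield {**row, "_source": name}  # tag each row with its origin

    def where(self, predicate: Callable[[dict], bool]) -> list[dict]:
        """Return the rows that satisfy `predicate`, evaluated at call time."""
        return [row for row in self if predicate(row)]


crm_rows = [{"email": "ana@example.com"}]
erp_rows = [{"email": "ben@example.com"}]
view = VirtualView({"crm": lambda: crm_rows, "erp": lambda: erp_rows})
crm_rows.append({"email": "cara@example.com"})  # change a source after creating the view
print(len(list(view)))  # 3: the view sees the new CRM record without any reload
```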

Cloud storage solutions provide scalable and flexible options for raw and transformed data.

Advanced Analytics Tools and Machine Learning Algorithms

Incorporate analytics tools to process and analyze data in real time.

Machine learning algorithms can automate parts of the data transformation process, ensuring accurate data analysis and valuable insights.

The Role of Data Engineers and Data Scientists in ETL

Data engineers and data scientists play critical roles in designing, implementing, and maintaining an effective ETL pipeline.

Importance of Data Engineering

Data engineers are responsible for building and maintaining the ETL pipeline, ensuring data integration processes run smoothly.

They focus on extracting data from multiple sources and effectively managing data migration and movement.

Collaboration with Data Scientists for Business Analytics

Data scientists work closely with data engineers to analyze the transformed data, using advanced analytics and machine learning to extract valuable insights.

Their collaboration ensures that the processed data supports business intelligence and drives informed decision-making.

Building a Unified View: Consolidating Data from Multiple Sources

A unified view of your data is essential for comprehensive business analytics and effective decision-making.

Creating a Unified Customer Profile through ETL

- Integrate data from multiple sources to create a single, unified customer profile.

- This process helps identify customers accurately and provides valuable insights for personalized marketing and customer relationship management.
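A simplified sketch of profile building, assuming records from every source share an email address that can serve as the matching key. The precedence rule used here (earlier sources win, later sources only fill gaps) is a hypothetical choice; real identity resolution often relies on fuzzier matching across several attributes.

```python
def build_profiles(*sources: list[dict]) -> dict[str, dict]:
    """Merge records that share a normalized email into one profile per customer.

    Hypothetical precedence rule: earlier sources win, and later sources only
    fill in fields that are still missing.
    """
    profiles: dict[str, dict] = {}
    for source in sources:
        for record in source:
            key = record["email"].strip().lower()  # normalize the match key
            profile = profiles.setdefault(key, {"email": key})
            for field, value in record.items():
                if field != "email" and value not in (None, "") and field not in profile:
                    profile[field] = value
    return profiles


crm = [{"email": "Ana@Example.com", "name": "Ana", "phone": ""}]
shop = [{"email": "ana@example.com ", "name": "A. Silva", "last_order": "2024-03-05"}]
print(build_profiles(crm, shop))
```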

Data Aggregation and Consolidation Techniques

Use data aggregation techniques to consolidate data sets and create a complete picture of your business processes.

Aggregated data from multiple sources can be processed and analyzed to drive better business insights and improve overall data quality.
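As a small example of aggregation, this sketch totals order amounts per customer, the Python equivalent of a SQL `GROUP BY ... SUM`. The `email` and `amount` field names are assumptions, and `Decimal` is used instead of `float` so that currency sums do not pick up binary rounding error.

```python
from collections import defaultdict
from decimal import Decimal


def total_by_customer(orders: list[dict]) -> dict[str, Decimal]:
    """Sum order amounts per customer email."""
    totals: dict[str, Decimal] = defaultdict(Decimal)  # missing keys start at Decimal("0")
    for order in orders:
        totals[order["email"]] += Decimal(order["amount"])
    return dict(totals)


orders = [
    {"email": "ana@example.com", "amount": "25.50"},
    {"email": "ana@example.com", "amount": "4.50"},
    {"email": "ben@example.com", "amount": "12.00"},
]
print(total_by_customer(orders))
```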

Best Practices for ETL Pipeline Management

Managing an ETL pipeline effectively requires adherence to best practices that ensure data quality and operational efficiency.

Streamlining the Data Integration Process

Simplify the data integration process using a well-structured ETL pipeline that efficiently extracts, transforms, and loads data.

Implement automation wherever possible to reduce manual intervention and improve speed.
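One common piece of automation is retrying transient failures, such as a timed-out API call or a dropped database connection, instead of waiting for someone to rerun the job by hand. The helper below is a hypothetical sketch with exponential backoff; which exceptions count as transient is an assumption you would tune per source, and many schedulers and orchestration tools offer retries built in.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retry(
    step: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Run `step`, retrying only on `retry_on` errors with exponential backoff.

    Waits base_delay, then 2 * base_delay, and so on between attempts, and
    re-raises the last error once all attempts are used. Other errors propagate
    immediately, since retrying a bad transformation will not fix it.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except retry_on:
            if attempt == attempts:
                raise  # out of retries: surface the error to the scheduler
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```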

Monitoring and Regular Reporting for Operational Efficiency

Regular monitoring and reporting are crucial for maintaining the health of your ETL pipeline.

Use analytics tools to track key performance indicators and confirm in real time that data is being processed accurately.
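As a sketch of what such tracking might look like, the hypothetical `RunMetrics` class below records row counts for one pipeline run and reports problems when the rejection rate passes a threshold or the counts fail to reconcile. The 5% default threshold is an arbitrary assumption.

```python
import time
from dataclasses import dataclass, field


@dataclass
class RunMetrics:
    """Counters for one pipeline run, plus simple health checks."""
    rows_in: int = 0
    rows_loaded: int = 0
    rows_rejected: int = 0
    started: float = field(default_factory=time.monotonic)

    def elapsed_seconds(self) -> float:
        return time.monotonic() - self.started

    def rejection_rate(self) -> float:
        return self.rows_rejected / self.rows_in if self.rows_in else 0.0

    def alerts(self, max_rejection_rate: float = 0.05) -> list[str]:
        """Return human-readable problems; an empty list means the run looks healthy."""
        problems = []
        if self.rejection_rate() > max_rejection_rate:
            problems.append(
                f"rejection rate {self.rejection_rate():.1%} exceeds {max_rejection_rate:.1%}"
            )
        if self.rows_loaded + self.rows_rejected != self.rows_in:
            problems.append("row counts do not reconcile")
        return problems


metrics = RunMetrics(rows_in=1000, rows_loaded=930, rows_rejected=70)
print(metrics.alerts())
```

The reconciliation check catches rows that silently disappear between extraction and loading, which a simple "job succeeded" status would miss.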

Managing Data Migration and Data Movement

Ensure smooth data migration by employing robust ETL tools that handle data movement seamlessly.

Address challenges related to data entry, duplicate data, and missing values through effective data cleansing techniques.

Ensuring Data Security and Compliance

Data security is paramount in any ETL process, especially when handling sensitive data sets and complying with emerging data privacy regulations.

Protecting Sensitive Data during ETL

Implement advanced security measures to safeguard sensitive data throughout the ETL pipeline.

Ensure that data integration processes adhere to industry standards and protect personally identifiable information.
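A minimal sketch of two common protections for personally identifiable information: a keyed hash (HMAC-SHA256) that replaces an email with a stable token, so records can still be joined, and a masked version for display. The hard-coded key is a placeholder assumption; in practice it would be loaded from a secrets manager and never stored in code.

```python
import hashlib
import hmac

# Placeholder only: a real key would be loaded from a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed SHA-256 hash: the same input always yields the same token, so joins still work."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep only the first character and the domain, e.g. 'a***@example.com'."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def protect(record: dict) -> dict:
    """Replace the raw email with a token and a masked display value."""
    out = dict(record)
    if "email" in out:
        raw = out.pop("email")
        out["email_token"] = pseudonymize(raw)
        out["email_masked"] = mask_email(raw)
    return out


print(protect({"email": "ana@example.com", "name": "Ana"}))
```

A keyed hash is used rather than a plain hash because unkeyed hashes of emails can be reversed by hashing lists of known addresses.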

Compliance with Emerging Data Privacy Regulations

Stay current with emerging data privacy regulations to ensure your ETL process remains compliant.

Utilize secure data integration solutions and cloud storage to protect data integrity and maintain customer trust.

How LinkLumin Can Help with Your Data Warehouse ETL Pipeline

LinkLumin offers a comprehensive solution to streamline and optimize your ETL pipeline for better business intelligence and revenue growth.

Comprehensive Data Integration and ETL Solutions

Unified Platform: LinkLumin consolidates data from multiple sources into a single system, enabling seamless integration and real-time analytics.

ETL Optimization: With advanced ETL tools, LinkLumin automates data extraction, transformation, and loading, ensuring data quality and reducing manual effort.

Real-Time Data Analytics and Reporting Tools

Actionable Insights: Access real-time insights into data processing, allowing marketing and data teams to make data-driven decisions.

Robust Reporting: Generate comprehensive reports that track key performance metrics, helping you refine your strategies and improve operational efficiency.

Streamlining Your Data Transformation Process with LinkLumin

Data Cleansing and Mapping: Utilize LinkLumin’s tools to cleanse and map data, ensuring that transformed data is accurate and in the desired format.

Integration with Existing Systems: Seamlessly integrate LinkLumin with your current accounting systems, CRM, and other internal processes for a unified data management solution.

Future Trends in ETL and Data Integration

Staying ahead of technological trends is essential for maintaining a competitive edge in data integration and analytics.

Emerging Data Integration Technologies

Advanced ETL Tools: New ETL tools offer more automation and advanced features to process data from multiple data sources easily.

Data Virtualization: Enhanced data virtualization techniques allow seamless data integration without physically moving data.

The Future of Machine Learning in ETL

Predictive Analytics: Machine learning algorithms are increasingly used to predict data trends and optimize ETL processes.

Enhanced Data Transformation: AI-driven automation will further streamline the extraction and transformation process, reducing errors and increasing data quality.

Innovations in Data Warehouse Solutions

Unified Data Platforms: Future innovations will enable even greater integration across diverse data sources, creating a more unified view of customer data.

Cloud-Based Solutions: The rise of cloud storage and cloud-based data warehouses will continue to drive flexibility and scalability in data integration.

Building a Robust ETL Pipeline for Business Intelligence

A robust ETL pipeline is essential for driving accurate data analysis and valuable business insights.

The Role of a Data Warehouse in Business Intelligence

Centralized Repository: A data warehouse stores data from multiple sources, providing a complete picture for business analytics.

Integration with Analytics Tools: Use advanced analytics and machine learning algorithms to process data and drive actionable insights.

Data Quality and Consistency

Ensuring Data Quality: Implement data cleansing and transformation processes to remove duplicate data, correct missing values, and convert data into a usable format.

Accurate Data Analysis: High-quality, transformed data enables accurate data analysis and supports effective decision-making.

Key Considerations for Building an ETL Pipeline

When building and structuring your ETL pipeline, consider these key aspects to ensure efficiency and reliability.

Managing Multiple Data Sources

Data Ingestion: Efficiently ingest data from multiple sources, including structured and unstructured data.

Consolidation: Use a data integration tool to consolidate data into one system, ensuring a unified view of all data.

Ensuring the Right Data Transformation Process

Complex Transformations: Manage complex transformations with data mapping and format conversion.

Data Aggregation: Aggregate data effectively to provide a complete picture for business intelligence.

Choosing the Right ETL Tools and Technologies

ETL Tool Selection: Evaluate different ETL tools based on advanced features, ease of integration, and cost-effectiveness.

Integration System: Choose a system that integrates seamlessly with your existing technology stack, including accounting and CRM platforms.

Enhancing the Data Integration Process

Streamlining your data integration process is key to building a robust ETL data pipeline.

Best Practices for Data Integration

Extract Transform Load (ETL): Follow a disciplined ETL process to extract data, transform it into the desired format, and load it into your target system.

Data Mapping: Use data mapping techniques to ensure data from multiple sources is accurately integrated.

Consolidating Data from Multiple Sources

Unified View: Create a unified customer profile by integrating data from various sources into one warehouse.

Data Aggregation Techniques: Implement techniques to aggregate data, ensuring a comprehensive view for analysis.

Optimizing Data Transformation and Loading

Data transformation and loading are critical stages of the ETL pipeline.

Data Transformation Process

Converting Raw Data: Transform raw data into a structured, usable format through complex transformations.

Data Cleansing: Remove duplicate data and fill in missing values to ensure data quality.

Loading Data into the Target System

Efficient Loading: Ensure the loading process is optimized to quickly transfer transformed data into the target system.

Accurate Data Analysis: A well-managed loading process supports accurate data analysis and drives business intelligence.
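For repeated loads, an upsert avoids reloading the whole table: new rows are inserted and existing rows are updated in place. This sketch again uses Python's `sqlite3` module as a stand-in target with a hypothetical `customers` table; the `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later, and other warehouses offer similar `MERGE` or upsert statements.

```python
import sqlite3


def upsert_customers(conn: sqlite3.Connection, rows: list[dict]) -> None:
    """Insert new customers and update existing ones in a single transaction."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT)"
    )
    sql = (
        "INSERT INTO customers (email, name) VALUES (:email, :name) "
        "ON CONFLICT(email) DO UPDATE SET name = excluded.name"
    )
    with conn:  # the whole batch commits together or rolls back together
        conn.executemany(sql, rows)


conn = sqlite3.connect(":memory:")
upsert_customers(conn, [{"email": "ana@example.com", "name": "Ana"}])
upsert_customers(conn, [
    {"email": "ana@example.com", "name": "Ana Silva"},  # existing row: updated
    {"email": "ben@example.com", "name": "Ben"},        # new row: inserted
])
print(conn.execute("SELECT email, name FROM customers ORDER BY email").fetchall())
```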

The Role of Data Quality in ETL Success

Ensuring high data quality is paramount to the success of your ETL pipeline.

Managing Data Quality

- Data Cleansing: Regularly cleanse data to remove errors and duplicate entries.

- Quality Assurance: Implement processes to monitor data quality, ensuring that only accurate and relevant data is loaded.
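Quality assurance rules can be expressed as small check functions applied before loading. In this hypothetical sketch, the field names and rules are assumptions; rows that fail any rule are set aside with their error messages rather than loaded, which gives monitoring something concrete to count.

```python
import re
from typing import Callable, Optional

# Deliberately loose email pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def check_email(value: object) -> Optional[str]:
    if not isinstance(value, str) or not EMAIL_RE.match(value):
        return "invalid email"
    return None


def check_amount(value: object) -> Optional[str]:
    if not isinstance(value, (int, float)) or value < 0:
        return "must be a non-negative number"
    return None


# Hypothetical rule set: field name -> check that returns an error message or None.
RULES: dict[str, Callable[[object], Optional[str]]] = {
    "email": check_email,
    "amount": check_amount,
}


def validate(rows: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split rows into (valid, rejected); rejected rows keep their error messages."""
    valid, rejected = [], []
    for row in rows:
        errors = []
        for field_name, check in RULES.items():
            message = check(row.get(field_name))
            if message:
                errors.append(f"{field_name}: {message}")
        if errors:
            rejected.append((row, errors))
        else:
            valid.append(row)
    return valid, rejected
```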

Achieving a Unified View

Consolidate Data: Integrate data from multiple sources and manage data quality to create a unified view.

Actionable Insights: High-quality data enables valuable insights for decision-making and business intelligence.

Utilizing Data Virtualization in Your ETL Pipeline

Data virtualization can enhance your ETL process by providing efficient access to data that stays in its source systems.

Benefits of Data Virtualization

Streamlined Access: Virtualization allows data teams to access data without physically moving it.

Integration Efficiency: It simplifies data integration from multiple sources into one unified system.

Enhancing Business Intelligence

Unified Data Access: Data virtualization supports real‑time analytics and improves overall data quality.

Cost Efficiency: Reduces the need for extensive data migration, saving time and reducing costs.

Testimonials

Johny

"LinkLumin's ETL pipeline has revolutionized our data processing. Our reports are now generated in real-time, allowing us to make faster, more informed decisions. The seamless integration with our data warehouse has been a game-changer!"

Emily Davis

"Managing multiple data sources was a nightmare before LinkLumin. Their ETL pipeline automates everything, ensuring clean, reliable data for our analytics team. Highly recommended!"

Michael Johnson

"The efficiency and scalability of LinkLumin’s ETL pipeline exceeded our expectations. We process large volumes of transactional data effortlessly, with no downtime. This has significantly improved our forecasting models."

Michael Smith

"With LinkLumin, our ETL workflows are fully optimized. The automation and error-handling capabilities have saved us countless hours of manual work. A must-have for any data-driven company!"

Lisa Taylor

"LinkLumin has simplified our data infrastructure. Their ETL pipeline provides accurate, up-to-date insights, helping us scale our operations with confidence. It's been a crucial part of our data strategy."

Unlock the Power of Your Data with LinkLumin’s ETL Pipeline!

Optimize your data processing, enhance analytics, and gain real-time insights with our seamless ETL solution. Get started today and transform the way you manage your data!

🔹 Schedule a Demo | 🔹 Get a Free Consultation

Start your ETL journey today with LinkLumin!

FAQs

What is LinkLumin’s Data Warehouse ETL Pipeline?

LinkLumin’s ETL pipeline automates the process of extracting, transforming, and loading data from multiple sources into your data warehouse, ensuring consistency, accuracy, and scalability.

Which data sources can LinkLumin integrate with?

Our ETL pipeline connects with databases, cloud storage, APIs, CRM systems, e-commerce platforms, and more, making it easy to consolidate your data.

How does LinkLumin ensure data quality and reliability?

We implement advanced validation, deduplication, and error-handling mechanisms to ensure your data remains clean, structured, and ready for analysis.

Is LinkLumin’s ETL pipeline suitable for large-scale data processing?

Yes! Our pipeline is designed for high-volume data ingestion and transformation, ensuring seamless performance even with complex datasets.

How quickly can I set up and start using LinkLumin’s ETL pipeline?

Deployment is fast and hassle-free. Our team provides full support to integrate your data sources and optimize the ETL process for your business needs.